The Future of AI Infrastructure: A Leader’s Perspective

Leading AI architecture at Hilti, I have a unique vantage point on the rapidly evolving landscape of AI infrastructure. In this article, I’ll share my insights on the key trends and innovations that I believe will shape its future.

The Evolving AI Data Infrastructure Landscape

The AI data infrastructure landscape is undergoing a significant transformation. I see six critical areas that will define the future of AI infrastructure:

- Sources Data Integrations

- Data Processing & ETL

- Big Data Storage

- Model Training

- Inference

- Data Services

1. Sources Data Integrations

The future of AI will be built on diverse and high-quality data sources. I anticipate a significant shift towards:

- Synthetic Data: As AI models become more complex, the demand for large, diverse datasets will outpace our ability to collect real-world data. Synthetic data will play a crucial role in filling this gap, especially for edge cases and scenarios where real data is scarce or sensitive.

- Internet of Things (IoT) and Edge Data: With the proliferation of IoT devices, we’ll see an explosion of real-time, sensor-generated data. This will drive innovations in edge computing and distributed AI systems.

- Multimodal Data: Future AI systems will need to seamlessly integrate data from various modalities — text, images, audio, video, and more. This will require new approaches to data collection and preprocessing.
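To make the synthetic data point concrete, here is a minimal sketch of generating sensor readings with a controllable share of rare edge cases. The function name, the field names, and the temperature distributions are illustrative assumptions, not values from any real device.

```python
import random

def synthetic_sensor_readings(n, anomaly_rate=0.05, seed=0):
    """Generate n synthetic temperature readings; a fraction are rare spikes."""
    rng = random.Random(seed)  # a fixed seed keeps the dataset reproducible
    rows = []
    for i in range(n):
        is_anomaly = rng.random() < anomaly_rate
        # Assumed distributions: normal readings near 20 C, spikes near 85 C.
        value = rng.gauss(85.0, 5.0) if is_anomaly else rng.gauss(20.0, 1.5)
        rows.append({"id": i, "temp_c": round(value, 2), "anomaly": is_anomaly})
    return rows

readings = synthetic_sensor_readings(1000)
edge_cases = sum(r["anomaly"] for r in readings)
print(edge_cases, "synthetic edge cases out of", len(readings))
```

Raising `anomaly_rate` is the point of the exercise: it lets a training set contain far more edge cases than real-world collection would typically provide.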

2. Data Processing & ETL

The data pipeline landscape is undergoing a dramatic transformation, driven by increasing data volumes and the need for faster, more efficient processing. Here’s how I see this space evolving:

Automated Data Discovery and Integration

The future of data integration lies in automation and intelligence. Modern platforms are emerging with capabilities to:

- Automatically scan and catalog data sources across an organization

- Use AI to understand data semantics and relationships

- Suggest optimal integration patterns based on data types and use cases

- Provide automated data quality checks and validation

- Generate documentation and lineage automatically
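As a rough illustration of automated cataloging and quality checks, the sketch below infers a type and a null fraction for each column of a CSV source. It uses only the Python standard library; the sample data and the `catalog_csv` and `infer_type` helpers are hypothetical, and a real platform would do far more (semantics, relationships, lineage).

```python
import csv
import io

def infer_type(values):
    """Return the narrowest type name that fits every non-empty value."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "unknown"
    for name, cast in (("int", int), ("float", float)):
        try:
            for v in non_empty:
                cast(v)
            return name
        except ValueError:
            continue  # try the next, wider type
    return "string"

def catalog_csv(text):
    """Build a tiny catalog entry (type, null fraction) per column."""
    rows = list(csv.DictReader(io.StringIO(text)))
    columns = list(rows[0].keys()) if rows else []
    return {
        col: {
            "type": infer_type([r[col] for r in rows]),
            "null_fraction": sum(r[col] == "" for r in rows) / len(rows),
        }
        for col in columns
    }

# Hypothetical sample source with one missing measurement.
sample = "device_id,torque_nm,region\n1,12.5,north\n2,,south\n3,14,north\n"
for column, entry in catalog_csv(sample).items():
    print(column, entry)
```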

Real-time Processing at Scale

As businesses become more real-time, data processing must evolve to match:

- Stream processing becoming the default rather than batch processing

- Edge computing playing a crucial role in reducing latency

- Serverless architectures enabling elastic scaling for variable workloads

- Advanced stream processing frameworks handling complex event processing

- Real-time ML model updates based on streaming data
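The core idea behind stream processing can be sketched in a few lines: events are aggregated as they arrive, and each result is emitted as soon as its time window closes. This is a simplified, single-process illustration that assumes events arrive in timestamp order; production stream processors also handle late data, state, and distribution.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, {key: count}) as each window closes.

    `events` is an iterable of (timestamp_seconds, key) in timestamp order.
    """
    current_start = None
    counts = defaultdict(int)
    for ts, key in events:
        start = ts - (ts % window_seconds)  # window this event belongs to
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)  # previous window is complete
            counts.clear()
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final window

events = [(0, "a"), (3, "b"), (9, "a"), (10, "a"), (25, "b")]
for window_start, window_counts in tumbling_window_counts(events, 10):
    print(window_start, window_counts)
```

Because the function is a generator, downstream consumers see each window without waiting for the whole input, which is the practical difference from a batch job.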

Intelligent Data Cleaning and Preprocessing

AI is revolutionizing how we prepare data:

- Automated anomaly detection and correction

- Smart schema mapping and transformation suggestions

- Automated feature engineering for ML pipelines

- Intelligent data type detection and conversion

- Automated data quality assessment and improvement recommendations
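Automated anomaly detection and correction can start very simply. The sketch below flags outliers with a modified z-score based on the median absolute deviation (MAD) and replaces them with the median. The 3.5 threshold is a commonly used default, and replacing with the median is just one possible correction strategy, chosen here for illustration.

```python
import statistics

def flag_outliers(values, threshold=3.5):
    """Return one boolean per value: True where the value looks anomalous."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return [False] * len(values)  # no spread, so nothing can be flagged
    # 0.6745 rescales MAD so the score is comparable to a z-score
    # when the underlying data is roughly normal.
    return [abs(0.6745 * (v - median) / mad) > threshold for v in values]

def correct_outliers(values, threshold=3.5):
    """Replace flagged values with the median of the series."""
    median = statistics.median(values)
    flags = flag_outliers(values, threshold)
    return [median if flagged else v for v, flagged in zip(values, flags)]

readings = [10.1, 9.8, 10.3, 10.0, 55.0, 9.9]
print(flag_outliers(readings))
print(correct_outliers(readings))
```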

Low-Code/No-Code Revolution

This is perhaps the most significant shift in data engineering:

- Visual pipeline builders replacing traditional coding

- Drag-and-drop interfaces for complex transformations

- Pre-built connectors for common data sources

- Automated code generation for optimized performance

- Citizen data engineers emerging as a new role

- Built-in best practices and governance

Emerging Trends

Several new developments are shaping the future:

- Declarative Data Pipelines: Describing what needs to be done rather than how to do it

- DataOps Integration: Bringing DevOps practices to data pipeline development

- Unified Batch and Streaming: Single pipeline architecture for both batch and real-time processing

- AI-Powered Optimization: Automatic pipeline optimization based on usage patterns

- Collaborative Data Preparation: Team-based approaches to data transformation with built-in version control
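A declarative pipeline separates the description of the work from its execution. In the sketch below, the pipeline is plain data (a list of steps) and a small interpreter decides how to run it. The step vocabulary (`filter`, `rename`, `select`) and the field names are assumptions made up for this example, not the syntax of any particular tool.

```python
# The "what": a pipeline described as data, which could equally live in YAML or JSON.
PIPELINE = [
    {"op": "filter", "field": "status", "equals": "active"},
    {"op": "rename", "from": "amt", "to": "amount"},
    {"op": "select", "fields": ["id", "amount"]},
]

def run_pipeline(rows, spec):
    """The "how": interpret each declared step against a list of dict rows."""
    for step in spec:
        op = step["op"]
        if op == "filter":
            rows = [r for r in rows if r.get(step["field"]) == step["equals"]]
        elif op == "rename":
            rows = [
                {(step["to"] if k == step["from"] else k): v for k, v in r.items()}
                for r in rows
            ]
        elif op == "select":
            rows = [{k: r[k] for k in step["fields"]} for r in rows]
        else:
            raise ValueError(f"unknown op: {op}")
    return rows

rows = [
    {"id": 1, "amt": 5, "status": "active"},
    {"id": 2, "amt": 7, "status": "closed"},
]
print(run_pipeline(rows, PIPELINE))
```

Because the specification is data, the same pipeline can be versioned, validated, or handed to a different engine without rewriting it, which is what makes the declarative style attractive for DataOps.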

3. Big Data Storage

The evolution of AI storage systems is being driven by the need for greater scalability, privacy, and intelligence. I have previously described how to optimize data storage in data platforms. Here’s a detailed look at key storage trends and the technologies that implement them:

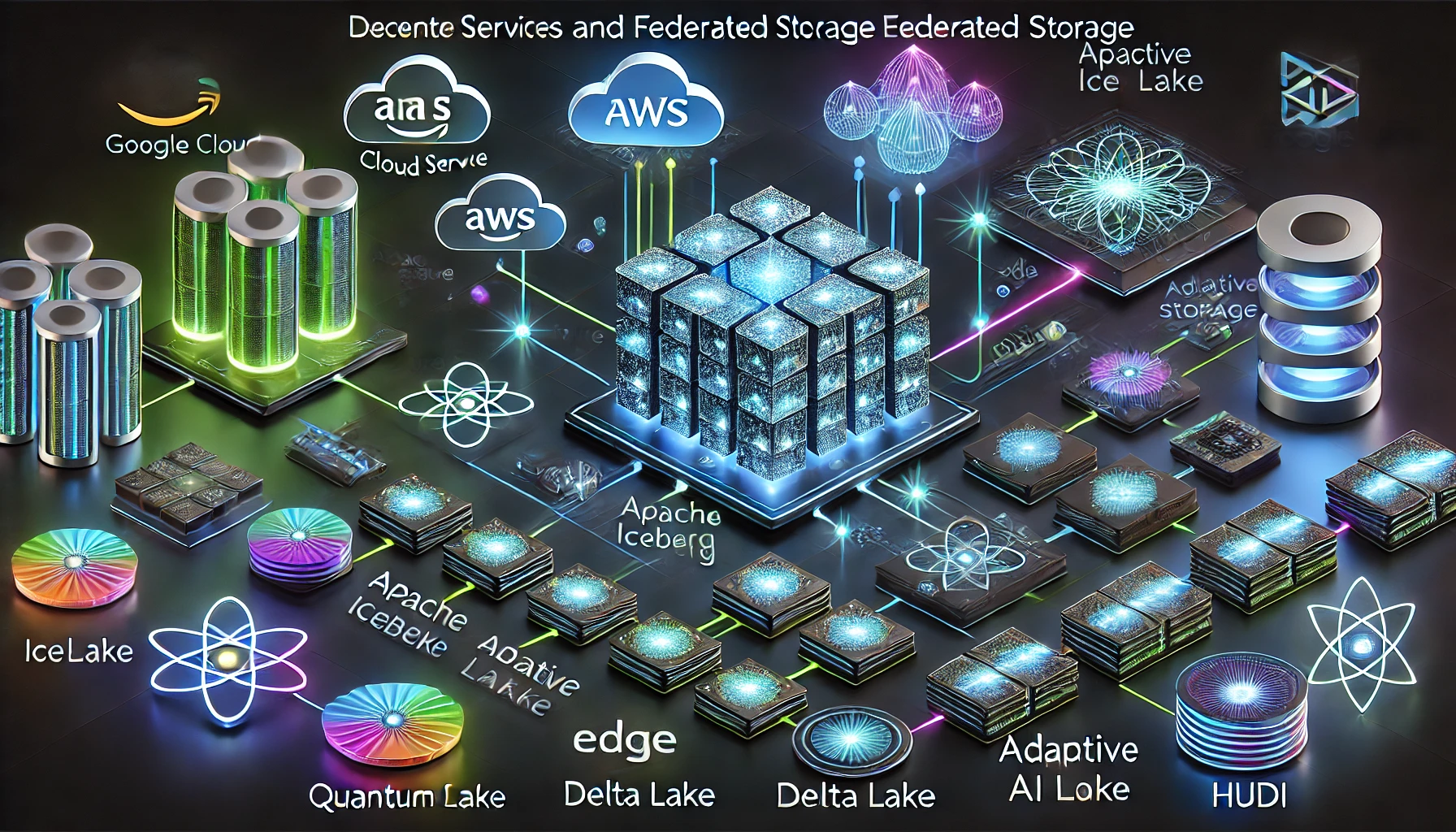

Decentralized and Federated Storage

Modern organizations need storage solutions that balance accessibility with privacy and compliance:

Technologies and Implementations:

- Data Lakehouse Architecture:

  - Apache Iceberg: Open table format providing ACID transactions, schema evolution, and time travel

  - Delta Lake: Similar to Iceberg, offers versioning and ACID compliance

  - Apache Hudi: Provides upserts and incremental processing capabilities

Cloud Implementation:

- AWS: Amazon S3 + AWS Lake Formation for governance

- Azure: Azure Data Lake Storage Gen2 with Azure Synapse

- Google Cloud: Cloud Storage + BigLake

Privacy-Preserving Features:

- Data encryption at rest and in transit

- Fine-grained access controls

- Data residency controls for regulatory compliance

- Audit logging and compliance reporting

Intelligent Data Organization

Modern storage systems are becoming smarter about how they organize and serve data:

Table Formats and Processing:

- Column-oriented storage:

  - Parquet for analytical workloads

  - ORC for high-performance reading

- Partition management:

  - Dynamic partitioning based on access patterns

  - Auto-compaction of small files

  - Intelligent bucketing strategies

Cloud Solutions:

- AWS: Amazon S3 Intelligent-Tiering, S3 Analytics

- Azure: Azure Storage lifecycle management

- Google Cloud: Object Lifecycle Management
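To illustrate what auto-compaction of small files involves, the sketch below plans which small files to merge so that each merged file stays within a target size. It uses a first-fit decreasing heuristic; the 128 MB target and 32 MB "small file" threshold are assumed values, and real table formats apply their own compaction strategies.

```python
def plan_compaction(file_sizes_mb, target_mb=128, small_threshold_mb=32):
    """Group small files into batches whose combined size stays within target_mb."""
    small = sorted(
        (s for s in file_sizes_mb if s < small_threshold_mb), reverse=True
    )
    batches = []
    for size in small:
        # First-fit decreasing: place each file in the first batch with room.
        for batch in batches:
            if sum(batch) + size <= target_mb:
                batch.append(size)
                break
        else:
            batches.append([size])  # no batch had room, so start a new one
    # Rewriting a single file on its own gains nothing, so drop such batches.
    return [b for b in batches if len(b) > 1]

sizes = [200, 30, 30, 30, 30, 20, 10, 5, 150]
print(plan_compaction(sizes))
```

Files at or above the threshold (200 MB and 150 MB here) are left untouched, since rewriting them would cost I/O without reducing the file count meaningfully.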

Adaptive Storage Systems

Storage systems that automatically optimize based on workload patterns:

Key Features:

- Auto-tiering:

  - Hot data in high-performance storage

  - Warm data in standard storage

  - Cold data in archive storage

Implementation Technologies:

- AWS:

  - S3 Intelligent-Tiering

  - EFS Intelligent-Tiering

  - DynamoDB adaptive capacity

- Azure:

  - Azure StorSimple

  - Azure Blob Storage auto-tiering

- Google Cloud:

  - Cloud Storage object lifecycle management

  - Filestore Enterprise auto-scaling
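The hot, warm, and cold tiering described above boils down to a policy over access recency. Here is a minimal sketch of such a policy; the 30-day and 90-day cutoffs and the object names are assumptions for illustration, and the managed services listed above use their own rules and thresholds.

```python
from datetime import datetime, timedelta

def assign_tier(last_access, now, warm_after_days=30, cold_after_days=90):
    """Pick a storage tier from how long ago an object was last read."""
    age = now - last_access
    if age >= timedelta(days=cold_after_days):
        return "cold"   # archive storage
    if age >= timedelta(days=warm_after_days):
        return "warm"   # standard storage
    return "hot"        # high-performance storage

now = datetime(2025, 1, 1)  # fixed reference time so the example is repeatable
objects = {
    "daily_metrics.parquet": now - timedelta(days=2),
    "q3_training_set.parquet": now - timedelta(days=45),
    "2019_archive.parquet": now - timedelta(days=400),
}
for name, last_access in objects.items():
    print(name, "->", assign_tier(last_access, now))
```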

Performance Optimization

Modern storage systems employ various techniques for optimal performance:

Caching and Buffering:

- In-memory caching:

  - Redis for hot data

  - Apache Ignite for distributed caching

- SSD caching:

  - Local NVMe for frequently accessed data

  - Elastic block storage optimization

Cloud Implementation:

- AWS: ElastiCache, DAX for DynamoDB

- Azure: Azure Cache for Redis

- Google Cloud: Memorystore
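The principle behind in-memory caching of hot data can be shown with a small least-recently-used (LRU) cache. This is a single-process sketch built on the standard library, not how Redis or Apache Ignite are implemented; LRU is only one of several eviction policies such systems offer.

```python
from collections import OrderedDict

class LRUCache:
    """Keep at most `capacity` items, evicting the least recently used one."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key, default=None):
        if key not in self._items:
            return default  # cache miss: the caller would read from slower storage
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used item

cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" is now the most recently used entry
cache.put("c", 3)   # capacity exceeded, so "b" is evicted
print(cache.get("a"), cache.get("b"), cache.get("c"))
```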

Data Catalog and Governance

Modern storage systems require robust cataloging and governance:

Metadata Management:

- AWS: AWS Glue Data Catalog

- Azure: Microsoft Purview (formerly Azure Purview)

- Google Cloud: Data Catalog

Governance Features:

- Automated data discovery and classification

- Data lineage tracking

- Access control and audit logging

- Compliance monitoring and reporting
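Data lineage tracking is, at its core, a graph problem: each dataset records what it was derived from, and questions such as "which raw sources feed this report?" become graph traversals. The dataset names below are hypothetical, and real catalogs store considerably richer metadata on each edge.

```python
# Hypothetical lineage: each dataset maps to the datasets it was derived from.
LINEAGE = {
    "sales_report": ["sales_clean"],
    "sales_clean": ["sales_raw", "currency_rates"],
    "sales_raw": [],
    "currency_rates": [],
}

def upstream_sources(dataset, lineage):
    """Return every dataset that `dataset` depends on, directly or indirectly."""
    seen = set()
    stack = [dataset]
    while stack:
        node = stack.pop()
        for parent in lineage.get(node, []):
            if parent not in seen:  # guards against revisiting shared parents
                seen.add(parent)
                stack.append(parent)
    return seen

print(sorted(upstream_sources("sales_report", LINEAGE)))
```

The same traversal run in the opposite direction answers the impact question: which downstream assets are affected if a source changes.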

Future Trends

Emerging storage technologies that will shape the future:

Quantum Storage:

- Currently experimental but promising for specific use cases

- Potential for massive parallel data processing

- Early research in quantum memory systems

Edge Storage:

- Distributed edge caching

- Local processing and storage optimization

- Edge-to-cloud data synchronization

Hybrid Storage:

- Seamless integration between on-premises and cloud storage

- Intelligent data placement across hybrid environments

- Unified management and governance

4. Model Training

AI model training will evolve in several key ways:

- Automated Machine Learning (AutoML) at Scale: AutoML tools will become more sophisticated, enabling the automated design and optimization of complex neural network architectures.

- Continuous Learning Systems: We’ll move away from the traditional train-deploy cycle towards systems capable of continuous learning and adaptation in production environments.

- Energy-Efficient Training: As AI models grow larger, there will be a strong focus on developing energy-efficient training methods to reduce the environmental impact of AI.
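To show what moving away from the train-deploy cycle can look like in its simplest form, here is a sketch of a model that updates itself on every new observation instead of being retrained in batches. It uses plain stochastic gradient descent on a one-feature linear model; the class name, the learning rate, and the simulated stream are assumptions for illustration, and production systems add safeguards such as drift detection and rollback.

```python
class OnlineLinearModel:
    """A one-feature linear model that learns from one example at a time."""

    def __init__(self, learning_rate=0.05):
        self.weight = 0.0
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.weight * x + self.bias

    def update(self, x, y):
        """Take one gradient step on the squared error for a single example."""
        error = self.predict(x) - y
        self.weight -= self.learning_rate * error * x
        self.bias -= self.learning_rate * error
        return error

model = OnlineLinearModel()
# Simulated production stream following y = 2x + 1 (an assumed ground truth).
for step in range(5000):
    x = (step % 10) / 10
    model.update(x, 2 * x + 1)

print(round(model.weight, 2), round(model.bias, 2))
```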

5. Inference

The future of AI inference will be shaped by:

- Edge AI: More AI computation will happen at the edge, closer to data sources, driving innovations in hardware and software optimized for edge deployment.

- Neuromorphic Computing: We may see the rise of neuromorphic computing systems that more closely mimic the human brain, potentially offering significant efficiency gains for certain types of AI workloads.

- AI-Specific Hardware: The development of specialized AI hardware will accelerate, with new architectures optimized for specific types of AI computations.

6. Data Services

As AI systems become more prevalent and critical, data services will evolve to meet new challenges:

- AI Governance and Explainability: Tools and frameworks for ensuring the transparency, fairness, and accountability of AI systems will become essential.

- Privacy-Preserving AI: Techniques like federated learning, differential privacy, and homomorphic encryption will become standard to protect data privacy in AI systems.

- Automated Compliance: AI-powered tools will emerge to automate compliance with evolving data protection and AI regulations.
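Federated learning, mentioned above, keeps raw data on each client and shares only model parameters. The aggregation step can be sketched as a weighted average of client weights, in the style of federated averaging. The client values below are made up, and a real deployment would add secure aggregation and often differential privacy on top.

```python
def federated_average(client_updates):
    """Combine client model weights, weighting each client by its sample count.

    `client_updates` is a list of (weights, num_samples) pairs, where every
    `weights` list has the same length.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]

# Two hypothetical clients; only their weights leave the device, never their data.
updates = [
    ([1.0, 2.0], 10),
    ([3.0, 4.0], 30),
]
print(federated_average(updates))
```

Weighting by sample count means a client with more data has proportionally more influence on the shared model.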
